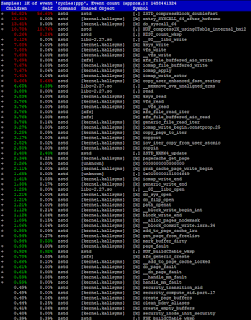

So with my build I've tried a few things. First, I modified the makefiles to change the optimization level from the default -O3 to -O2. As you might expect, that killed performance; I just wanted to see what effect it would have on the program.

I also tried modifying the hot function, which was built around a switch statement. Both variants of the code that I tried worked just fine.

I also tried to hunt down the large number of syscalls, but trying to change them turned out to be futile. Sadly, they were all needed for each call. (Who would have thought Facebook would write such well-optimized code?)

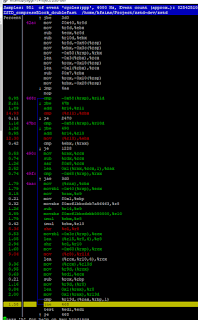

```c
size_t ZSTD_compressBlock_doubleFast(
        ZSTD_matchState_t* ms, seqStore_t* seqStore, U32 rep[ZSTD_REP_NUM],
        void const* src, size_t srcSize)
{
    const U32 mls = ms->cParams.searchLength;

    //Remove && mls < 8
    return (mls > 3 && mls < 8)
        ? ZSTD_compressBlock_doubleFast_generic(ms, seqStore, rep, src, srcSize, mls, ZSTD_noDict)
        : ZSTD_compressBlock_doubleFast_generic(ms, seqStore, rep, src, srcSize, 3, ZSTD_noDict);

    /*switch(mls)
    {
    default: // includes case 3
    case 4 :
        return ZSTD_compressBlock_doubleFast_generic(ms, seqStore, rep, src, srcSize, 4, ZSTD_noDict);
    case 5 :
        return ZSTD_compressBlock_doubleFast_generic(ms, seqStore, rep, src, srcSize, 5, ZSTD_noDict);
    case 6 :
        return ZSTD_compressBlock_doubleFast_generic(ms, seqStore, rep, src, srcSize, 6, ZSTD_noDict);
    case 7 :
        return ZSTD_compressBlock_doubleFast_generic(ms, seqStore, rep, src, srcSize, 7, ZSTD_noDict);
    case 8 :
    }*/
}
```

This is the code I focused my efforts on. This one function accounted for more than 56% of the program's run time while it was doing its compression.

```c
return (mls > 3 && mls < 8)
    ? ZSTD_compressBlock_doubleFast_generic(ms, seqStore, rep, src, srcSize, mls, ZSTD_noDict)
    : ZSTD_compressBlock_doubleFast_generic(ms, seqStore, rep, src, srcSize, 3, ZSTD_noDict);
```

This line was meant to cut down on the operations needed by replacing the whole switch statement with a single conditional.

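```c
return (mls > 3)
    ? ZSTD_compressBlock_doubleFast_generic(ms, seqStore, rep, src, srcSize, mls, ZSTD_noDict)
    : ZSTD_compressBlock_doubleFast_generic(ms, seqStore, rep, src, srcSize, 3, ZSTD_noDict);
```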

I also tried the variant above, which drops the `mls < 8` check, to cut back on operations even further.
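To see exactly which `mls` argument each version hands to `ZSTD_compressBlock_doubleFast_generic`, here is a small standalone sketch that reproduces only the dispatch logic of the original switch and of my two ternary variants. The helper names are hypothetical and the real zstd function is not called; the sketch only shows which value each form would pass along.

```c
#include <stdint.h>

typedef uint32_t U32; /* stands in for zstd's U32 in this sketch */

/* Original switch: 4..7 pass through; anything else (including 3) uses 4. */
static U32 mls_from_switch(U32 mls)
{
    switch (mls) {
    default: /* includes case 3 */
    case 4: return 4;
    case 5: return 5;
    case 6: return 6;
    case 7: return 7;
    }
}

/* Variant 1: (mls > 3 && mls < 8) ? mls : 3 */
static U32 mls_from_ternary(U32 mls)
{
    return (mls > 3 && mls < 8) ? mls : 3;
}

/* Variant 2: (mls > 3) ? mls : 3, with no upper bound */
static U32 mls_from_ternary_no_upper(U32 mls)
{
    return (mls > 3) ? mls : 3;
}
```

For the values the switch names explicitly (4 to 7), all three forms pass the same argument. They only diverge outside that range: for `mls == 3` the original passes 4 while both ternaries pass 3, and for a value like 9 the second variant passes 9 straight through. So the variants are not strictly the same dispatch as the original, even though they passed the smoke tests.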

All of these changes built fine, passed the smoke tests, and produced correct output without a problem.

As far as testing goes, I've been lucky: nothing broke when I built and tested my changes. Every modification I tried built successfully and passed the smoke tests that come with the package.

With the smoke tests cleared and the build successful, I then had to run tests on my actual test data. I wrote a script that runs the application 50 times, and I ran that script several times for each variant. I was quite sad to see the results.
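My runner was a script, but the underlying idea is simple enough to sketch in C: time a workload a fixed number of times with a monotonic clock and summarize the runs. This is a minimal sketch, assuming a POSIX system with `clock_gettime`; `workload_fn`, `benchmark`, and `sample_workload` are hypothetical names, and in practice the workload would be one compression run over the test data.

```c
#define _POSIX_C_SOURCE 199309L
#include <stddef.h>
#include <time.h>

#define NUM_RUNS 50 /* the script ran the program 50 times per batch */

typedef struct {
    double min_ms;
    double max_ms;
    double mean_ms;
} bench_stats;

/* Signature for one unit of work (e.g. one compression run); hypothetical. */
typedef void (*workload_fn)(void *ctx);

static double elapsed_ms(struct timespec start, struct timespec end)
{
    return (double)(end.tv_sec - start.tv_sec) * 1e3
         + (double)(end.tv_nsec - start.tv_nsec) / 1e6;
}

/* Run `work` `runs` times and summarize the wall-clock time of each run. */
static bench_stats benchmark(workload_fn work, void *ctx, size_t runs)
{
    bench_stats s = {0.0, 0.0, 0.0};
    double total = 0.0;
    for (size_t i = 0; i < runs; i++) {
        struct timespec t0, t1;
        clock_gettime(CLOCK_MONOTONIC, &t0);
        work(ctx);
        clock_gettime(CLOCK_MONOTONIC, &t1);
        double ms = elapsed_ms(t0, t1);
        if (i == 0 || ms < s.min_ms) s.min_ms = ms;
        if (i == 0 || ms > s.max_ms) s.max_ms = ms;
        total += ms;
    }
    if (runs > 0) s.mean_ms = total / (double)runs;
    return s;
}

/* Example workload for trying the harness: sums integers into *ctx. */
static void sample_workload(void *ctx)
{
    unsigned long *acc = ctx;
    for (unsigned long i = 0; i < 100000UL; i++)
        *acc += i;
}
```

Running every build through the same harness with the same input is what makes the min, mean, and max comparable between variants.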

It turns out that even though my changes look, at the source level, like they cut back on the operations the function needs, several hundred test runs showed that none of them had any measurable effect. This might be because -O3 optimizes so aggressively that the compiler already handles the switch statement about as well as my rewrite does.
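One plausible explanation, which I have not confirmed by reading the generated assembly, is compile-time specialization. As far as I can tell from the source, zstd marks the `_generic` function for forced inlining and each `case` passes `mls` as a literal, so at -O3 the compiler can produce a separate copy specialized for each match length. My ternary passes the runtime `mls` in its first branch instead. The sketch below shows the pattern on a toy function; `toy_hash`, `toy_hash_dispatch`, and `toy_hash_ternary` are hypothetical names, not zstd code.

```c
#include <stdint.h>

/* Toy "template" function: mls selects how many low bytes of v are hashed.
   When mls is a compile-time constant and the call is inlined, the compiler
   can drop the switch and keep only the matching branch. */
static inline uint32_t toy_hash(uint64_t v, uint32_t mls)
{
    uint64_t masked;
    switch (mls) {
    default: /* treat unexpected values like 4, as the original dispatch does */
    case 4: masked = v & 0xFFFFFFFFull; break;
    case 5: masked = v & 0xFFFFFFFFFFull; break;
    case 6: masked = v & 0xFFFFFFFFFFFFull; break;
    case 7: masked = v & 0xFFFFFFFFFFFFFFull; break;
    }
    return (uint32_t)((masked * 0x9E3779B97F4A7C15ull) >> 40);
}

/* Switch-style dispatch: every call site passes a literal, so each inlined
   copy of toy_hash can be specialized for a single value of mls. */
uint32_t toy_hash_dispatch(uint64_t v, uint32_t mls)
{
    switch (mls) {
    default:
    case 4: return toy_hash(v, 4);
    case 5: return toy_hash(v, 5);
    case 6: return toy_hash(v, 6);
    case 7: return toy_hash(v, 7);
    }
}

/* Ternary-style dispatch: in the first branch mls reaches toy_hash as a
   runtime value, so the switch inside it may have to stay in the code. */
uint32_t toy_hash_ternary(uint64_t v, uint32_t mls)
{
    return (mls > 3 && mls < 8) ? toy_hash(v, mls) : toy_hash(v, 3);
}
```

Both dispatchers compute the same results for in-range values; the difference, if any, is only in the code the compiler generates, which would fit with the timings not moving.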

Within reasonable variance, the results stayed between 0.05 ms and 0.08 ms (this was the test data set with 150 photos). This was consistent across all modifications and the original build.
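A single min-to-max range makes it hard to tell a small improvement from noise. A slightly more careful way to compare two builds is to compute each one's mean and sample variance over the same number of runs. The sketch below uses a crude rule of thumb (call the builds different only if their means are further apart than the combined spread of one run from each); it is not a formal significance test, and `summarize` and `looks_different` are hypothetical names.

```c
#include <stddef.h>

typedef struct {
    double mean;
    double variance; /* sample variance, n - 1 denominator */
} summary;

/* Mean and sample variance of n timings (in ms). */
static summary summarize(const double *ms, size_t n)
{
    summary s = {0.0, 0.0};
    if (n == 0) return s;
    double sum = 0.0;
    for (size_t i = 0; i < n; i++) sum += ms[i];
    s.mean = sum / (double)n;
    if (n > 1) {
        double sq = 0.0;
        for (size_t i = 0; i < n; i++) {
            double d = ms[i] - s.mean;
            sq += d * d;
        }
        s.variance = sq / (double)(n - 1);
    }
    return s;
}

/* Crude rule of thumb, not a significance test: report a difference only if
   |mean_a - mean_b| > sqrt(var_a + var_b). Compared in squared form, so no
   sqrt (and no libm) is needed. */
static int looks_different(summary a, summary b)
{
    double d = a.mean - b.mean;
    return d * d > a.variance + b.variance;
}
```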

As for how useful this might be, I think it is still somewhat useful to know. Facebook did a bang-up job on this software. It's as fast as advertised and, honestly, it's well built. It shows that there isn't a whole lot you can do to the code to push it further. I'm guessing a more experienced software engineer might be able to tell me otherwise, but to the best of my ability I could not make the software any more optimized.

This is all very repeatable; it's easy to reproduce the results I got from my testing.

Analysis:

Benchmarking has mostly meant running a script that feeds data into the program over and over and over. Everything I did yielded the same results: nothing improved performance, and every build except the -O2 one gave the same timings. My guess is that the data I used may only ever have triggered the default case. This is just an assumption, and I wouldn't know how to force the higher `mls` values for the method.
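One way to test that guess, rather than assume it, would be to temporarily count how often each `mls` value reaches the dispatch function. The sketch below is hypothetical instrumentation (the counter array and helper names are mine, not zstd's): in a real build, `record_mls(mls)` would be called at the top of `ZSTD_compressBlock_doubleFast`, and `report_mls` could be called at the end of a run, for example from an `atexit` handler. It is shown standalone here so it compiles on its own.

```c
#include <stdint.h>
#include <stdio.h>

typedef uint32_t U32; /* stands in for zstd's U32 in this sketch */

#define MLS_SLOTS 16 /* hypothetical bound; values >= 15 share the last slot */

static unsigned long mls_hits[MLS_SLOTS];

/* Call once per block with the mls value about to be dispatched on. */
static void record_mls(U32 mls)
{
    mls_hits[mls < MLS_SLOTS ? mls : MLS_SLOTS - 1]++;
}

/* Print every mls value that was seen at least once. */
static void report_mls(FILE *out)
{
    for (U32 i = 0; i < MLS_SLOTS; i++)
        if (mls_hits[i] != 0)
            fprintf(out, "mls=%u: %lu blocks\n", (unsigned)i, mls_hits[i]);
}
```

If only one value ever shows up across the whole test data set, that would confirm that my benchmarks only exercised a single branch of the dispatch.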

I think the methods I used, both to attempt to optimize the software and to test it, were reasonable. They were very consistent: I used the same data all the way through and tested the specific section that lit up like a Christmas tree. The approach was consistent and targeted, and I think it was a very safe way to go about working and testing.

As for pushing this upstream, I wouldn't bother given the lack of improvement. There is no reason to submit a change upstream that doesn't improve performance.