Build:

I was able to build the Zstandard software on the yaggi server without any issues. I installed it locally in my own directory, just to play it safe and avoid messing up the system or other files.

I ran the software several times to compress my own files and it worked just fine.

The software did pass its smoke test! It came with a make check target to run, and it yielded clean, passing results.

The target system is also the yaggi server, which is an x86_64 architecture server.

Test Setup:

For my test data I collected a selection of 15 screenshots that I had sitting on my phone. I plan to multiply that quantity by 10, giving me 150 photos to process, which has shown a reasonable time to compress. I'm simply copying the same 15 files 10 times over for the larger quantity of test data. This way I know the data is at least consistent between the small and large sets.
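Here is a minimal sketch of how the copying step could be scripted so the 150 file set is built the same way every time. The function name, the directory arguments, and the `_copyNN` naming scheme are my own assumptions for illustration, not something from the Zstandard tooling.

```python
import shutil
from pathlib import Path


def replicate_dataset(src_dir, dst_dir, copies=10):
    """Copy every file in src_dir into dst_dir `copies` times under unique names.

    Hypothetical helper: the "_copyNN" suffix is just an illustrative
    naming scheme so the duplicates do not overwrite each other.
    """
    src = Path(src_dir)
    dst = Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)

    created = []
    # Sort so the output order is the same on every run.
    for src_file in sorted(p for p in src.iterdir() if p.is_file()):
        for i in range(copies):
            target = dst / f"{src_file.stem}_copy{i:02d}{src_file.suffix}"
            shutil.copyfile(src_file, target)
            created.append(target)
    return created
```

With 15 source files and `copies=10` this produces 150 files whose contents are byte-for-byte copies of the originals.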

Benchmarks:

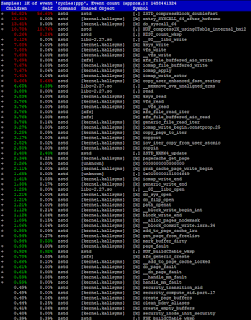

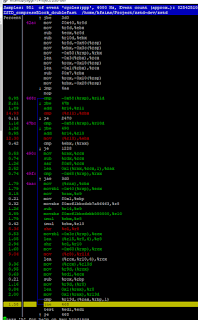

For the benchmarks I was able to clock about 0.45 seconds to compress 150 photos (which is super quick - good job Facebook). I ran these tests well over 10 times, both with the small 15 sample size and the 150 sample size. Going from 15 to 150 photos simply multiplied the time it took by 10, which is what I was expecting. That showed me that there was nothing fancy happening with the larger amount of data, just the same work for that data set multiplied. So this was very repeatable, and you should get the same results, give or take some reasonable variation due to the nature of server loads.

Outside of the times, I ran perf and found out that with the 15 photo sample the compression took less time than the overhead of the system setting things up. When I switched to the 150 sample the opposite happened, which makes sense simply because it takes 10 times longer to compress data that is 10 times larger. The function was actually a constructor. As you can see, "compressBlock_doublefast" is the main compression routine doing the heavy lifting for the program. The rest of the red you see in the photo below is mostly just system set up! This was super consistent across multiple tests, even after warming up the cache.
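The repeated timing runs described above could be scripted roughly like this. This is only a sketch under a few assumptions: the helper names are made up for illustration, `zstd_bin` is a placeholder for wherever the local install put the binary, and the only zstd flags used are `-q` (quiet) and `-f` (overwrite existing output).

```python
import statistics
import subprocess
import time


def time_runs(task, repeats=10):
    """Call task() `repeats` times and return each wall-clock duration in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        task()
        durations.append(time.perf_counter() - start)
    return durations


def scaling_ratio(small_times, large_times):
    """Median time of the large set divided by median time of the small set."""
    return statistics.median(large_times) / statistics.median(small_times)


def compress_with_zstd(files, zstd_bin="zstd"):
    """Compress each file to <name>.zst with the zstd CLI.

    Assumption: zstd_bin points at the locally installed binary.
    """
    subprocess.run([zstd_bin, "-q", "-f", *map(str, files)], check=True)
```

To use it, time `lambda: compress_with_zstd(small_files)` and `lambda: compress_with_zstd(large_files)` with `time_runs`, then pass both lists to `scaling_ratio`. A ratio close to 10 would match the linear scaling I saw; using the median keeps one slow run on a busy server from skewing the comparison.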